Peer assessment with a comparing tool reduces workload for tutors

16 Jun

You can imagine that assessing and commenting on the work of 91 students creates a considerable workload. Hoping to reduce some of that workload, Hasselt University experimented with Comproved’s comparing tool. By combining peer assessment in the tool with a traditional assessment by the lecturer, the workload was reduced by up to 60%. This is how they went about it.

A group of 91 second-year bachelor’s students in physical therapy were given the following assignment at the end of the academic year:

- They had to formulate a clinical research question based on their experience as physiotherapists;

- Then they had to search for a relevant scientific article and use it to formulate an answer to the research question;

- Finally, they had to evaluate the article and indicate the strengths and weaknesses of the study.

Normally, one or two lecturers evaluate all these papers, awarding a ‘pass’ or ‘fail’ grade and providing feedback. This way of assessing naturally creates a considerable workload, especially when more than one assignment per student has to be assessed.

Experimenting with pairwise comparisons

This time, inspired by a presentation on the Comproved tool, the lecturer decided to conduct an experiment. Initially, he was a little sceptical about the tool, but the possibilities were tempting enough to change his mind. In the experiment, students judged and commented on each other’s papers through pairwise comparisons in the tool. In parallel, the lecturers assessed the papers in their traditional way, so that the students’ assessments could be compared with those of the lecturers.

Based on the students’ pairwise comparisons, the tool calculated the Scale Separation Reliability (SSR). The SSR was .80, which indicates high reliability. To achieve that degree of reliability, the 91 students made a total of 910 comparisons. Since every comparison involves two papers, each paper was compared 20 times (910 × 2 / 91 = 20).
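The post does not describe how the tool turns comparisons into a rank order and an SSR, so the following is only a minimal sketch under our own assumptions, not Comproved’s implementation. It fits a Bradley-Terry model (a standard model for pairwise comparison data) with Hunter’s MM algorithm and then estimates the SSR as the share of the observed variance in the scores that is not measurement error. The function names, the toy data and the standard-error approximation (which ignores covariances between estimates) are all hypothetical choices for illustration.

```python
import math
from collections import defaultdict


def fit_bradley_terry(comparisons, n_items, iterations=500):
    """Estimate Bradley-Terry log-strengths with Hunter's MM algorithm.

    comparisons: list of (winner, loser) paper indices, one per judgement.
    Assumes every paper has at least one win and one loss; otherwise the
    maximum-likelihood estimate does not exist.
    """
    wins = [0] * n_items
    pair_counts = defaultdict(int)  # comparisons per unordered pair of papers
    for winner, loser in comparisons:
        wins[winner] += 1
        pair_counts[(min(winner, loser), max(winner, loser))] += 1

    strength = [1.0] * n_items
    for _ in range(iterations):
        denom = [0.0] * n_items
        for (i, j), n in pair_counts.items():
            share = n / (strength[i] + strength[j])
            denom[i] += share
            denom[j] += share
        # MM update: observed wins divided by the expected-exposure term.
        strength = [wins[k] / denom[k] for k in range(n_items)]
        # Fix the scale: rescale so the log-strengths sum to zero.
        log_mean = sum(math.log(s) for s in strength) / n_items
        strength = [s / math.exp(log_mean) for s in strength]
    return [math.log(s) for s in strength]


def scale_separation_reliability(theta, comparisons):
    """SSR = (observed variance - mean squared standard error) / observed variance.

    Standard errors are approximated from each paper's Fisher information,
    ignoring covariances between estimates (a simplifying assumption).
    """
    info = [0.0] * len(theta)
    for winner, loser in comparisons:
        # Model probability that `winner` beats `loser`.
        p = 1.0 / (1.0 + math.exp(theta[loser] - theta[winner]))
        info[winner] += p * (1.0 - p)
        info[loser] += p * (1.0 - p)
    mean_sq_se = sum(1.0 / x for x in info) / len(info)
    mean = sum(theta) / len(theta)
    observed_var = sum((t - mean) ** 2 for t in theta) / len(theta)
    return (observed_var - mean_sq_se) / observed_var


# Invented toy data (not from the Hasselt study): three papers, nine judgements.
toy = [(0, 1), (0, 1), (1, 0), (1, 2), (1, 2), (2, 1), (0, 2), (0, 2), (2, 0)]
theta = fit_bradley_terry(toy, n_items=3)
print("scores:", [round(t, 2) for t in theta])
print("SSR:", round(scale_separation_reliability(theta, toy), 2))
```

With so few judgements the SSR of the toy example is low or even negative; the point is that more comparisons per paper (20 in the study) shrink the standard errors and push the SSR towards 1.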

Students assess almost as well as tutors

The feedback given by students was of high quality. A survey among the students supports this: they experienced the peer feedback from the tool as relevant, fair and legitimate. Because almost every assessor gave feedback on almost every paper they had to compare, every student received feedback from 15 to 20 peers. Students considered this an added value of the comparing tool.

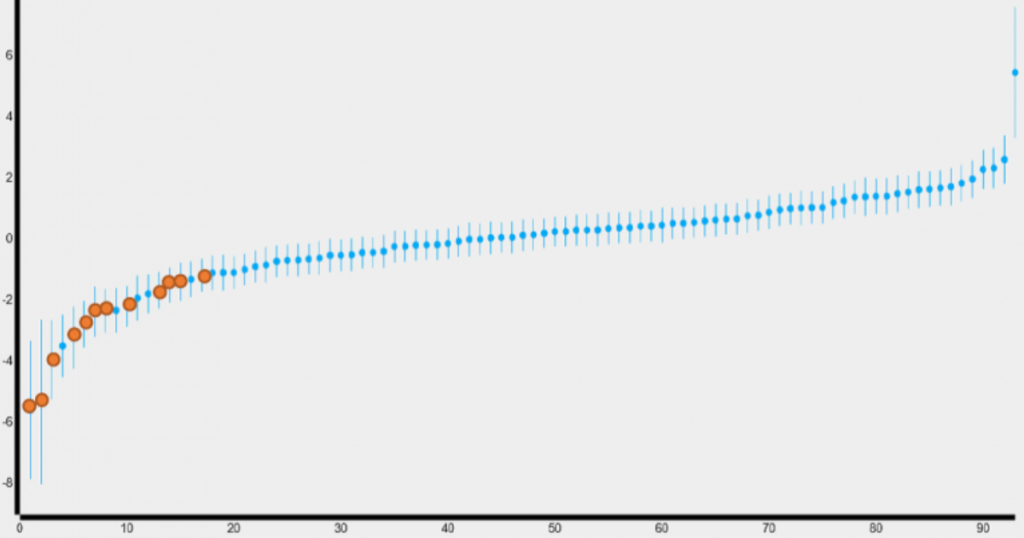

If we compare the results of the peer assessment with the lecturers’ pass/fail decisions, we see a strong similarity. As the figure above shows, 12 students received a fail mark from the lecturer (red dots), and they are all on the left side of the rank order that resulted from the students’ peer assessments. This suggests that, by using pairwise comparison, students can assess their peers’ papers almost as well as the lecturers do in their traditional way.

However, as you can see, some blue dots also remain on the left side. These are papers that the students ranked as weak, but that the lecturers passed. Therefore, in the next academic year, the lecturer will check the 40% lowest-ranked papers to decide whether they pass or not.

This combination of peer assessment and a final check by the lecturer reduces the lecturer’s workload by at least 60%, since only the lowest-ranked 40% of the papers still need the lecturer’s judgement. Moreover, the quality of the assessments and feedback is safeguarded.
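As a rough check of that figure, assuming the lecturer’s effort scales with the number of papers checked and using this year’s group of 91 students:

$$
0.40 \times 91 \approx 36 \text{ papers to check instead of } 91, \qquad 1 - 0.40 = 0.60
$$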

Want to know exactly how the comparing tool works? Find more info here or contact us with your specific question!