28 Feb Assessing videos in an easy way

Different types of media, such as video, audio, or images, are increasingly used to assess students’ competences. However, because they allow for large variation in performance between students, grading them is difficult. An online comparison tool can make assessing videos easier.

Comproved is such a tool. Students can easily upload their work in any media type (text, audio, image, video). The tool then puts together random pairs of products and presents them to the assessors. The only task for assessors is to choose which of the two is better, using their own expertise. Assessors find it easy to make such comparative judgements because they are not forced to score each work on a (long) list of criteria. Each work is presented multiple times to multiple assessors. Finally, the tool constructs a scale on which the students’ works are ranked according to their quality.
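To make the pairing step concrete, here is a minimal sketch of how random pairs could be put together so that every work shows up several times. It is an illustration only, not Comproved's actual pairing logic: the function name `make_random_pairs` and the `rounds` parameter are hypothetical, and real tools may pair works in smarter ways.

```python
import random
from collections import Counter


def make_random_pairs(works, rounds, seed=None):
    """Build random pairs so that each work appears at least `rounds` times.

    Hypothetical helper for illustration; not Comproved's algorithm.
    """
    if len(works) < 2:
        raise ValueError("need at least two works to compare")
    rng = random.Random(seed)
    pairs = []
    for _ in range(rounds):
        order = list(works)
        rng.shuffle(order)
        # Pair neighbours in the shuffled order: (0, 1), (2, 3), ...
        for i in range(0, len(order) - 1, 2):
            pairs.append((order[i], order[i + 1]))
        # With an odd number of works one is left over;
        # pair it with a randomly chosen other work.
        if len(order) % 2 == 1:
            pairs.append((order[-1], rng.choice(order[:-1])))
    return pairs


if __name__ == "__main__":
    videos = ["video_a", "video_b", "video_c", "video_d", "video_e"]
    pairs = make_random_pairs(videos, rounds=4, seed=1)
    appearances = Counter(work for pair in pairs for work in pair)
    for work in videos:
        print(work, "appears", appearances[work], "times")
```

The resulting list of pairs could then be divided among the assessors, so that each work is judged multiple times by different people.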

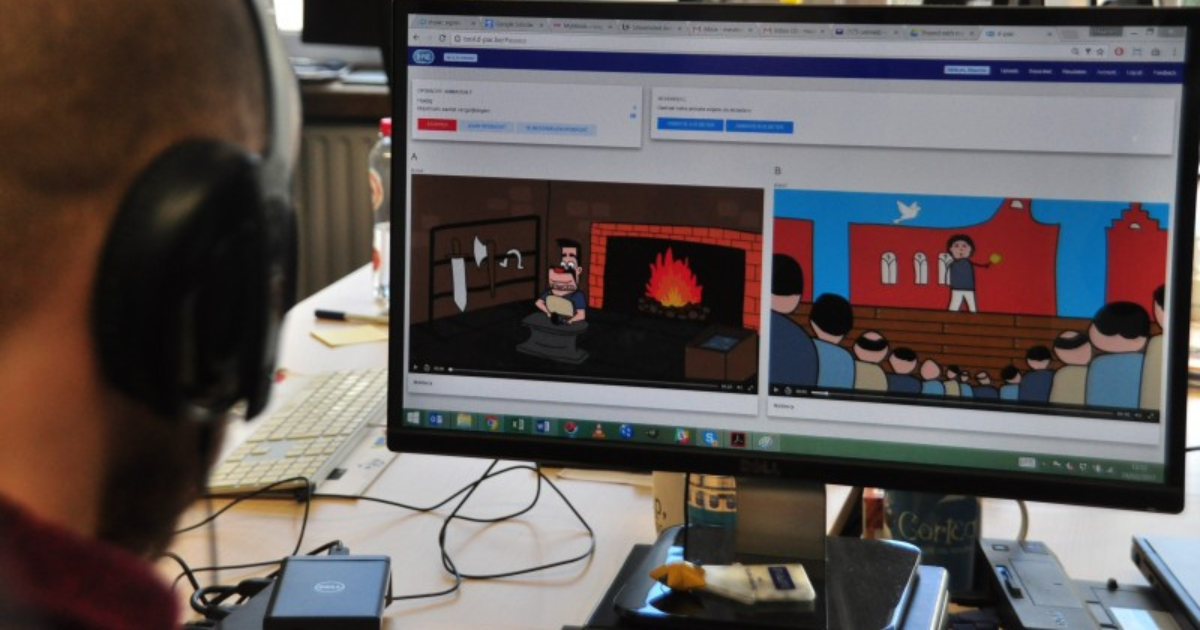

Assessing from home

Recently, Comproved was used in a Bachelor's programme in Multimedia and Communication Technology to assess students’ animation skills. Students received an audio fragment of a radio play by ‘Het Geluidshuis’ and had to accompany it with animation. A group of 9 assessors evaluated the quality of the animations. The assessors differed in background and expertise: 3 people from Het Geluidshuis, 2 expert animators, 2 alumni students, and 2 teachers.

For Ivan Waumans, coordinator of the course, working with Comproved was really easy and fast. “About 2 hours after I sent the login information to the assessors I got an email from one of them saying: Done!” Assessors valued that they could do the evaluations from their homes or offices. Some assessors did all the comparisons in one session, whereas others spread their comparisons over a few days. None of them had any trouble using or understanding Comproved.

The only difficulty the assessors experienced was when they had to choose between 2 videos that were of equal quality. Ivan had to reassure them that it was OK to just pick one of them, because the tool generates the same ability score for videos of equal quality. Ability scores represent the likelihood that a particular video will win against others. Based on these scores, the tool provides a ranking in which videos are ordered from low to high quality.
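For readers curious how scores like these can be derived from pairwise choices, the sketch below fits a Bradley-Terry model, a common statistical model for comparative judgement. That Comproved uses this exact model is an assumption, and the function names `fit_bradley_terry` and `win_probability` as well as the example judgements are made up for illustration. In the example, videos A and B have mirrored results, so they end up with the same score even though assessors had to pick a winner each time.

```python
from collections import defaultdict


def fit_bradley_terry(judgements, iterations=200):
    """Estimate a strength per item from (winner, loser) pairs.

    Uses the standard iterative (minorisation-maximisation) update for the
    Bradley-Terry model. Strengths are normalised to sum to 1.
    Assumed model for illustration; not necessarily what Comproved does.
    """
    items = sorted({item for pair in judgements for item in pair})
    wins = defaultdict(int)
    meetings = defaultdict(int)  # number of comparisons per ordered pair
    for winner, loser in judgements:
        wins[winner] += 1
        meetings[(winner, loser)] += 1
        meetings[(loser, winner)] += 1

    strength = {item: 1.0 / len(items) for item in items}
    for _ in range(iterations):
        updated = {}
        for i in items:
            denom = sum(
                meetings[(i, j)] / (strength[i] + strength[j])
                for j in items
                if j != i and meetings[(i, j)] > 0
            )
            updated[i] = wins[i] / denom if denom > 0 else strength[i]
        total = sum(updated.values())
        strength = {item: value / total for item, value in updated.items()}
    return strength


def win_probability(strength, a, b):
    """Modelled probability that item a is preferred over item b."""
    total = strength[a] + strength[b]
    return 0.5 if total == 0 else strength[a] / total


if __name__ == "__main__":
    # Invented judgements: A and B are equally good, C is weaker.
    judgements = (
        [("A", "C")] * 3 + [("B", "C")] * 3
        + [("A", "B"), ("B", "A"), ("C", "A"), ("C", "B")]
    )
    scores = fit_bradley_terry(judgements)
    for video in sorted(scores, key=scores.get):  # low to high quality
        print(video, round(scores[video], 3))
```

Sorting the videos by their estimated strength gives the kind of low-to-high ranking described above, and the gaps between strengths show how far apart neighbouring videos are.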

An objective assessment method

Ivan and his team assigned grades to the animations based upon the order and ability scores. As there were gaps between ability scores, the final grades were not equally distributed over the ranking order. For instance, the top 2 videos got 18/20 and 16/20. Teachers were happy with this more objective grading system. “When I look at certain videos and their grade, I notice that I would have given a higher or lower grade depending on my personal taste or the relation with the students”, Ivan explained. He experienced that by including external people in the evaluation, this bias was eliminated.

Only 2 students were a bit disappointed with the grade they received. After the procedure of comparative judgement was explained to them, they accepted their grade. The fact that 9 people contributed to ranking the videos, instead of only one teacher, convinced them the grade was fair.

Would you like to assess videos in an easy way? Contact us, we’ll be happy to help you!

This article is an adaptation of a blog that appeared previously on Media & Learning.