03 Jul Opinion: Pupils are just like shoes

Roos Van Gasse sees teachers coming up with new ways to test students’ knowledge, but not to grade their exams.

This is a translation of an article published on 1 June 2018 in De Standaard.

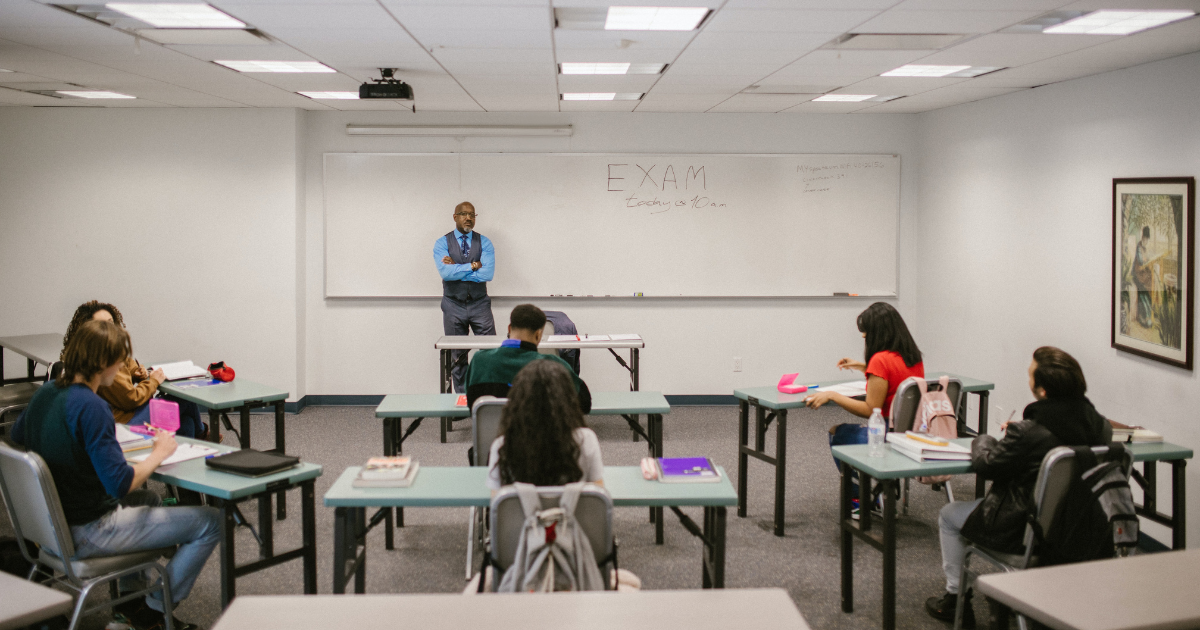

The examination period is just around the corner. Teachers have the important task of judging whether students have the competences required to move on to the following year. But what are these judgements worth from a scientific point of view?

We know that teachers have a mountain of marking to get through during the examination period. That is why exams are designed accordingly: closed knowledge questions, fill-in-the-blank questions and multiple-choice questions. These can be graded in large numbers and are reliable measurements at the same time. But research has repeatedly shown that such questions focus heavily on reproduction and therefore do not give a good picture of students’ understanding of the subject matter, let alone of their ability to apply that knowledge or to use it in other contexts. They do not match the aim of training students to become competent, self-regulated learners. Assessing whether students have the more generic competences needed to acquire, apply and process new knowledge on their own requires more complex, open-ended tasks, such as essays on particular topics or integrated tests.

Class council

‘We are already moving in that direction,’ many teachers may now be thinking. But if you introduce new question formats, you also need to rethink how you assess the work they produce. For open tasks, research tells us that common assessment methods do not work well. Different teachers sometimes assess the same task very differently, and a teacher’s judgement can vary greatly depending on whether they are hungry or have just eaten a delicious three-course meal. And even when teachers explicitly agree on the criteria for assessing open-ended tasks, there is evidence that they differ widely in what exactly they assess (or which criteria they actually apply). As researchers, we find that kind of unreliable and invalid measurement very problematic.

Yet it is on such measurements that we currently base decisions about students’ educational pathways and, ultimately, the course of their lives. Of course, class councils have an important role to play here, supplementing examination results with observations from throughout the school year. Nevertheless, it is worth pausing to reflect on the quality of assessment, especially given the time, energy, good intentions and stress that go into something like an examination period, and given that we know assessments are usually flawed in some way.

Thinking critically

However, there are alternative assessment methods that are better suited to how the human brain works. By nature, we make decisions by comparing. When you go to buy a pair of shoes, for example, you are unlikely to take a list of criteria to the shoe shop and judge every pair against it. You are more likely to compare: the pairs of shoes with one another, or a pair of shoes with the ideal pair you have in mind.

When teachers assess, they do the same thing. Give them a single task and they find it very difficult to judge its quality; offer them tasks in pairs and they immediately see which of the two is better. Thanks to their expertise, teachers can see at a glance which opinion piece is better argued, which strategy for solving a mathematical problem is better, which piece of source-based research is more thorough or which visual work shows more creativity. By having several teachers each make a series of such comparisons, a statistical algorithm can establish a ranking by quality. This makes assessing easier, which in turn makes the measurements more reliable and valid.
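The article does not say which statistical algorithm turns the comparisons into a ranking. A model commonly used for pairwise judgements of this kind is the Bradley-Terry model, and the sketch below assumes it, fitting the model with a simple iterative (MM) update. The function name `bradley_terry`, the four essays "A" to "D" and the list of judgements are all hypothetical, made up for illustration; this is not presented as the method used by the author or any particular assessment tool.

```python
from collections import defaultdict


def bradley_terry(comparisons, max_iter=10_000, tol=1e-10):
    """Fit a Bradley-Terry model to pairwise judgements (illustrative sketch).

    comparisons: list of (winner, loser) pairs, one per teacher judgement.
    Returns {item: strength}. A higher strength means the item was judged
    better more often, taking into account which items it was compared with.
    """
    items = sorted({item for pair in comparisons for item in pair})
    wins = defaultdict(int)    # number of comparisons each item won
    n_pair = defaultdict(int)  # number of times each unordered pair was compared
    for winner, loser in comparisons:
        wins[winner] += 1
        n_pair[frozenset((winner, loser))] += 1

    # A finite estimate requires, at minimum, that every item won and lost
    # at least once (a necessary but not sufficient condition).
    losers = {loser for _, loser in comparisons}
    if any(wins[i] == 0 or i not in losers for i in items):
        raise ValueError("every item must win and lose at least one comparison")

    strength = {i: 1.0 for i in items}
    for _ in range(max_iter):
        # MM update: p_i <- wins_i / sum_j n_ij / (p_i + p_j),
        # computed from the previous round's estimates.
        new = {}
        for i in items:
            denom = sum(
                n / (strength[i] + strength[j])
                for pair, n in n_pair.items() if i in pair
                for j in pair - {i}
            )
            new[i] = wins[i] / denom
        # Strengths are only defined up to a common factor; fix their mean at 1.
        scale = len(items) / sum(new.values())
        new = {i: v * scale for i, v in new.items()}
        converged = max(abs(new[i] - strength[i]) for i in items) < tol
        strength = new
        if converged:
            break
    return strength


# Hypothetical judgements: each tuple means "the first essay was judged better".
judgements = [
    ("A", "B"), ("A", "C"), ("A", "D"), ("B", "C"), ("B", "D"),
    ("C", "D"), ("D", "A"), ("C", "B"), ("A", "B"), ("B", "D"),
]
scores = bradley_terry(judgements)
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)  # A comes first and D last; B and C have equivalent records
```

Note that no single comparison has to be decisive: essay D beats A once, yet A still ranks first because the model weighs every judgement against all the others. In practice, tools built on this idea also choose which pairs to show teachers and report how reliable the resulting ranking is; the sketch leaves both out.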

If we want to avoid mass hysteria in June, we need to think critically about the quality of measurement. For if we keep muddling along with assessment methods that do not quite match the direction in which our education is evolving, what is the teacher’s judgement worth?