01 Sep Comparing tool makes selection procedure easier and more reliable

Radboud University used the Comproved comparing tool in the selection procedure for its Bachelor of Psychology. Wondering exactly how that worked? Read on!

Summary

Application: summative assessment

Subject: Bachelor of Psychology selection procedure

Assessment size: 1,500 products (cover letters)

LMS: Brightspace

Each year, the Admissions Office Social Sciences at Radboud University must review the cover letters of about 1,500 prospective students applying to the Bachelor of Psychology program. Previously, assessors evaluated these letters with a rubric, which took a lot of work. Moreover, their evaluations often differed widely, resulting in low inter-rater reliability. Looking for a more efficient and reliable way to evaluate the letters, the Admissions Office turned to Comproved.

Selection procedure

In the selection procedure, candidates are assessed on three components: an online psychology test, the grades they obtained in their previous education, and a so-called matching assignment. In the matching assignment, candidates write a letter arguing why they chose Psychology at Radboud and what they have to offer the program. It was this letter that was assessed with Comproved.

Assessment with comparing tool

A total of 28 assessors took part: 13 faculty members from the program, 5 research master students and 10 staff members from the Department of Psychology and the Admissions Office Social Sciences. The assessors were shown the letters in randomly constructed pairs. Guided by the holistic question “Who are you selecting for our program?”, they indicated for each pair which letter came from the student they felt best fit the program. Each letter was therefore judged multiple times, each time in a different pair and by multiple assessors. Each assessor had to make 400 comparisons. From all these judgements, the comparing tool generated a ranking of the letters from weakest to strongest.
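How can a large number of “this letter is better” choices turn into a single ranking? A common approach in comparative judgement is a Bradley-Terry-type model, which gives every letter a hidden “strength” so that the chance of letter i winning against letter j grows with the difference between their strengths. The article does not say which model Comproved uses, so the Python sketch below is only an illustration under that assumption: the function names `fit_strengths` and `rank_least_to_best`, the small L2 penalty and the learning-rate settings are hypothetical choices, not Comproved's implementation.

```python
import math


def fit_strengths(comparisons, n_iter=500, lr=0.1, l2=0.1):
    """Estimate a log-strength per letter from (winner, loser) judgements.

    Hypothetical sketch: a Bradley-Terry model fitted by gradient ascent on
    the L2-penalised log-likelihood. The penalty keeps estimates finite for
    letters that always win or always lose.
    """
    items = sorted({item for pair in comparisons for item in pair})
    theta = {item: 0.0 for item in items}
    for _ in range(n_iter):
        # Start each gradient with the penalty term, which pulls strengths toward 0.
        grad = {item: -l2 * theta[item] for item in items}
        for winner, loser in comparisons:
            # Model probability that the chosen letter beats the other one.
            p_win = 1.0 / (1.0 + math.exp(theta[loser] - theta[winner]))
            # Unexpected wins push the winner up and the loser down more strongly.
            grad[winner] += 1.0 - p_win
            grad[loser] -= 1.0 - p_win
        for item in items:
            theta[item] += lr * grad[item]
    return theta


def rank_least_to_best(comparisons):
    """Order letters from weakest to strongest estimated strength."""
    theta = fit_strengths(comparisons)
    return sorted(theta, key=theta.get)


# Toy data: A beats B and C, B beats C; each judgement is made three times.
judgements = [("letter_A", "letter_B"), ("letter_B", "letter_C"),
              ("letter_A", "letter_C")] * 3
print(rank_least_to_best(judgements))  # ['letter_C', 'letter_B', 'letter_A']
```

Because every letter ends up with a continuous strength estimate rather than a rubric total, two letters rarely share exactly the same value, which fits the observation below that the tool produced unique scores.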

Results

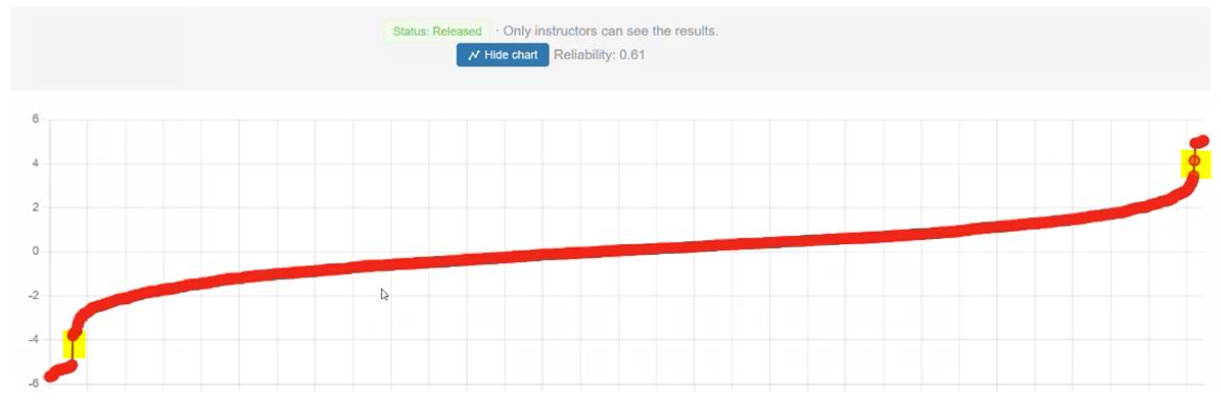

- Reliability: The reliability of the ranking was 0.61, a clear improvement on previous years.

- Scores: An additional advantage of the ranking is that each letter receives a unique score (in this case out of 100) with many decimal places, so ties are practically ruled out. This saved the coordinators a lot of time in grading: previously, when students ended up with the same final score, they had to look at an additional assignment from the test to decide between them.

- Efficiency: The total number of hours spent was higher than with the old method. To make the assessment more efficient, the Admissions Office suggested reconsidering the composition of the group of assessors, since assessors differed widely both in the time they had available and in the average time they needed per review. In addition, one of the assessors suggested making the matching assignment clearer, more distinctive and shorter to speed up the review process.

Assessors’ experiences

The assessors liked the system and the concept of the assessment. They found it easy, quick and intuitive to use, and they appreciated that their judgement was not the only one, because several people looked at each letter. Some assessors did feel, however, that the fast pace of grading meant the letters could not be given enough attention.

Furthermore, the ranking showed that many of the assignments were not very distinctive. One of the assessors therefore suggested the following: “Perhaps grading could be made easier by adjusting the assignment. I found that I often quickly read through the section on ‘Why do you choose Radboud?’ because many students gave the same reasons there and it did little to determine which student I thought was more suited to the program. In that sense, that part of the assignment was not very distinctive, but it did take time to scan the assignment for the relevant information (…) If the assignment could be focused a little more on the core (and maybe max half a page), then grading might be even easier.”

Conclusion

Overall, the findings with Comproved are very positive. The Admissions Office is very pleased with the inter-rater reliability and is now looking at how to better allocate hours and workload going forward. With some modifications to the assignment, the review process could become significantly more efficient.

Do you think the comparing tool could also make the selection procedure at your institution more reliable and efficient? Don’t hesitate to contact us for an exploratory discussion.